Robots.txt: Allow and Disallow

The robots.txt file is a plain text file that tells search engine robots (also known as "spiders" or "crawlers") which pages or files on your website they should or shouldn't request. It must be served from the root of your site (for example, https://example.com/robots.txt), because crawlers do not look for it anywhere else.

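The robots.txt file consists of groups of rules that tell robots visiting your site how to behave. Each group names the robots it applies to with User-agent and then lists the paths those robots may or may not request. The most common use of robots.txt is to keep robots from crawling pages or directories that are not meant for public consumption. Note that it controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it.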

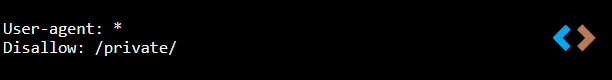

Here's an example of a robots.txt file that disallows all robots from accessing the /private directory on your website:
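
A minimal version of such a file, assuming nothing beyond the two directives discussed below, could look like this:

```text
# Rules for every crawler
User-agent: *
# Block everything under /private/
Disallow: /private/
```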

In this example, User-agent: * means that the rules apply to all robots. Disallow: /private/ tells robots not to request any URL whose path starts with /private/, which covers every page and file in the /private directory.

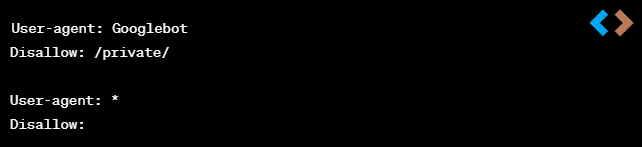

You can also use robots.txt to set different rules for different robots. Here's an example of a robots.txt file that disallows Googlebot from accessing the /private directory, while allowing all other robots to access it:
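
A minimal sketch of such a file, again assuming only the directives discussed below. The order of the two groups is a stylistic choice here: a compliant crawler follows the group that names it most specifically, so Googlebot uses its own group wherever that group appears in the file.

```text
# Group for Googlebot only
User-agent: Googlebot
Disallow: /private/

# Group for every other crawler; an empty Disallow blocks nothing
User-agent: *
Disallow:
```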

In this example, User-agent: Googlebot specifies that the rule applies only to Googlebot. Disallow: /private/ tells Googlebot not to access any pages or files in the /private directory. User-agent: * with an empty Disallow: field means that all other robots are allowed to access everything on the website.

It's important to note that robots.txt is a voluntary convention, not an enforcement mechanism. Well-behaved robots follow it, but others may ignore the rules and crawl your site anyway. The file is also publicly readable, so listing a path in it reveals that the path exists, and it does nothing to stop anyone from requesting that path directly. Protect sensitive content with real access controls, such as authentication, rather than relying on robots.txt.